Prefer uniq()

ClickHouse has two commonly used aggregate functions that look very much alike: uniq() and uniqExact().

In fact, uniqExact(x) is equivalent to COUNT(DISTINCT x). If you run COUNT(DISTINCT x) with the default settings, the column name in the result is rewritten directly to uniqExact(x).

The official documentation also advises: "use uniq unless you absolutely need the most precise number", because uniq is optimized for memory usage.
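To make the memory trade-off concrete, here is a minimal Python sketch that contrasts an exact distinct count with a fixed-memory approximate one. Note that this is not how ClickHouse implements uniq: the approximate side uses a generic "k minimum values" estimator that I swapped in purely for illustration, and the names `exact_distinct`, `approx_distinct`, and the parameter `k` are all hypothetical.

```python
import hashlib
import heapq


def exact_distinct(values):
    """Exact count: every distinct value is kept in memory."""
    return len(set(values))


def _hash01(value):
    """Map a value to a pseudo-random float in [0, 1)."""
    digest = hashlib.sha1(repr(value).encode("utf-8")).digest()
    return int.from_bytes(digest[:8], "big") / 2**64


def approx_distinct(values, k=1024):
    """Approximate count that keeps at most k hashes in memory.

    Illustrative k-minimum-values estimator (an assumption for this
    post, NOT the algorithm ClickHouse uses for uniq).
    """
    heap = []        # max-heap via negation: holds the k smallest hashes
    members = set()  # the same hashes, for fast duplicate checks
    for value in values:
        h = _hash01(value)
        if h in members:
            continue
        if len(heap) < k:
            heapq.heappush(heap, -h)
            members.add(h)
        elif h < -heap[0]:
            # New hash is smaller than the largest one kept: swap it in.
            evicted = -heapq.heappushpop(heap, -h)
            members.discard(evicted)
            members.add(h)
    if len(heap) < k:
        # Fewer than k distinct hashes seen, so the count is exact.
        return len(heap)
    # The k-th smallest hash tells us how densely [0, 1) is populated.
    return int((k - 1) / -heap[0])


if __name__ == "__main__":
    data = [i % 20000 for i in range(60000)]
    print("exact :", exact_distinct(data))
    print("approx:", approx_distinct(data))
```

The exact version grows with the number of distinct values, while the approximate version never stores more than `k` hashes no matter how large the input is. That is the same kind of trade-off the documentation is pointing at when it recommends uniq over uniqExact.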

Here is how the official documentation describes them:

uniq(x [, …])

Calculates the approximate number of different values of the argument.

In other words, it returns an approximate count of the distinct values among the arguments passed in.

The arguments can be:

- Tuple
- Array
- Date
- DateTime
- String
- numeric types

Returned value

- A UInt64-type number.

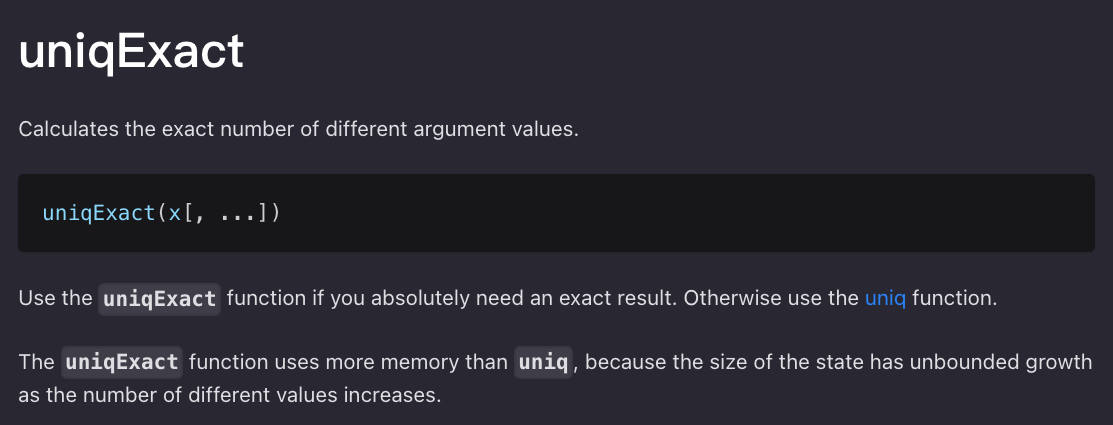

uniqExact(x [, …])

Calculates the exact number of different argument values.

In other words, it returns the exact count of the distinct values among the arguments passed in.

It accepts the same argument types and returns the same type of value as uniq.
